What if filmmakers could place viewers directly into a scene? Blackmagic’s URSA Cine Immersive camera and the Apple Vision Pro’s immersive format offer exactly that. Together, they represent a leap into spatial cinema.

We explored this workflow, from capture to color grading, to understand how it helps filmmakers build immersive stories.

Unmatched Capture Power with the URSA Cine Immersive

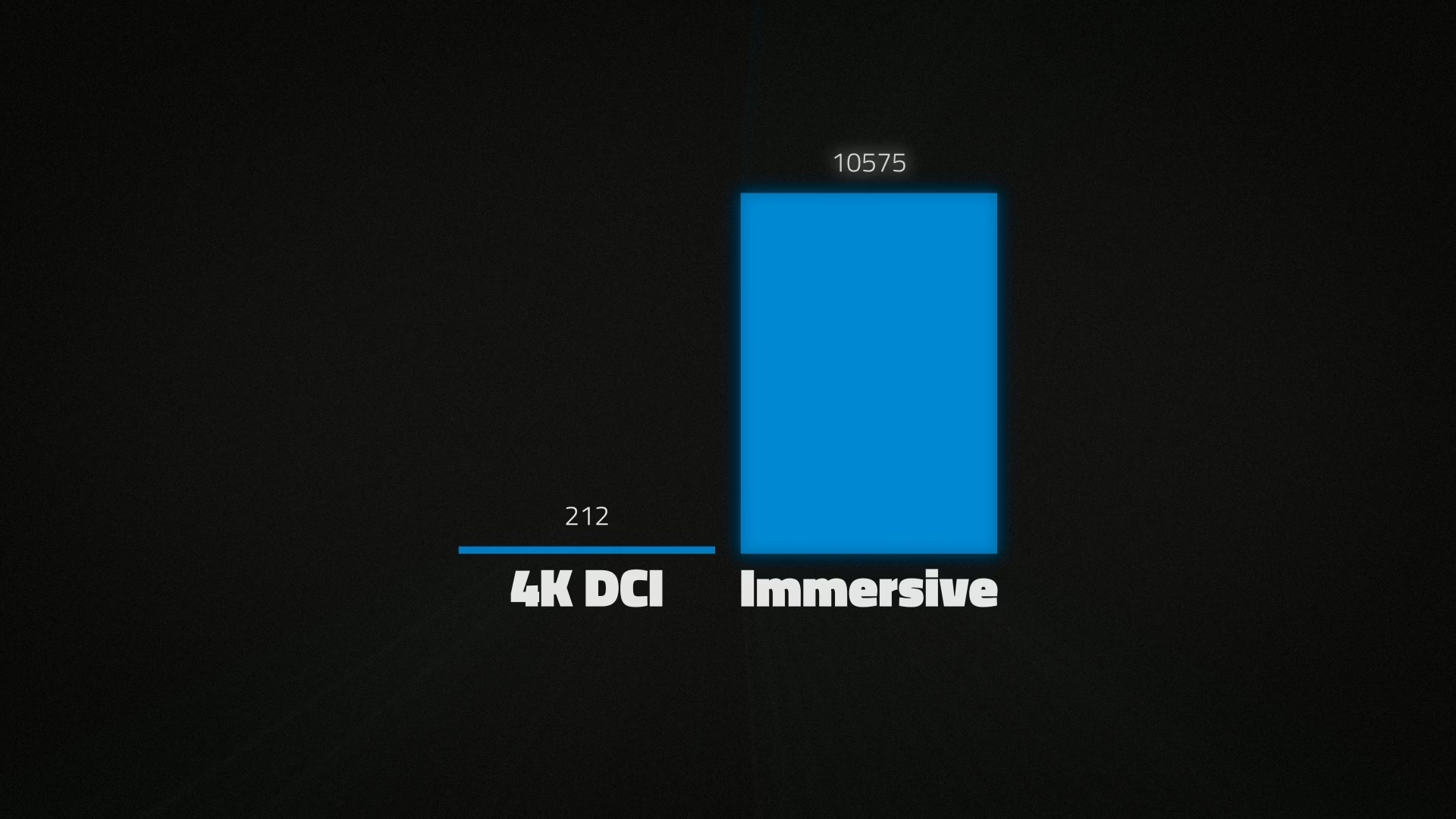

The URSA Cine Immersive uses dual 8K sensors running at 90fps. For comparison, a 4K camera at 24fps handles about 212 million pixels per second, while the URSA processes over 10 billion. That throughput is what gives the image its lifelike realism.
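The pixel-rate figures above can be checked with a few lines of Python. This is a sketch under stated assumptions: 4K DCI is taken as 4096 × 2160, and the URSA Cine Immersive is modeled at 8160 × 7200 per eye, which is Blackmagic’s published figure rather than one stated in this article.

```python
# Pixel-throughput comparison. Resolutions are assumptions:
# 4K DCI is 4096 x 2160; the URSA Cine Immersive per-eye resolution
# (8160 x 7200) is Blackmagic's published spec, used here as an input.
DCI_4K = (4096, 2160)
URSA_PER_EYE = (8160, 7200)

def pixels_per_second(width: int, height: int, fps: int, views: int = 1) -> int:
    """Pixels captured per second for `views` images at the given frame rate."""
    return width * height * fps * views

flat_4k = pixels_per_second(*DCI_4K, fps=24)
immersive = pixels_per_second(*URSA_PER_EYE, fps=90, views=2)  # two eyes

print(f"4K DCI @ 24fps:       {flat_4k / 1e6:,.0f} million px/s")
print(f"Immersive @ 90fps x2: {immersive / 1e9:.2f} billion px/s")
print(f"Ratio:                {immersive / flat_4k:.1f}x")
```

With those inputs, the immersive stream works out to roughly 10.6 billion pixels per second, close to 50 times the 4K figure.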

Understanding Apple Vision Pro as a Cinema Platform

The Vision Pro is Apple’s spatial computing headset. It delivers video in a 180-degree, stereoscopic immersive format, and unlike a traditional screen, it makes viewers feel physically present in the scene.

Its display has extremely high pixel density: about 54 Vision Pro pixels fit in the area of a single iPhone 16 pixel. At that scale, individual pixels are effectively invisible, so pixelation never breaks the sense of immersion.
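One way to see where a figure like 54 comes from is to compare pixel pitches. The sketch below assumes an iPhone 16 density of about 460 pixels per inch and a Vision Pro pixel width of about 7.5 micrometers; both are outside assumptions rather than numbers from this article, and pixels are treated as square.

```python
# Rough check of the "about 54 Vision Pro pixels per iPhone 16 pixel" figure.
# Assumptions (not from the article): iPhone 16 is ~460 pixels per inch,
# and Vision Pro pixels are ~7.5 micrometers wide.
MICROMETERS_PER_INCH = 25_400

def pixel_pitch_um(ppi: float) -> float:
    """Width of one pixel in micrometers for a given pixels-per-inch density."""
    return MICROMETERS_PER_INCH / ppi

iphone_pitch = pixel_pitch_um(460)  # ~55.2 micrometers
vision_pro_pitch = 7.5              # assumed, in micrometers

# Treating pixels as square, the area ratio is the pitch ratio squared.
area_ratio = (iphone_pitch / vision_pro_pitch) ** 2
print(f"About {area_ratio:.0f} Vision Pro pixels per iPhone 16 pixel")
```

Because the comparison is by area, a pitch ratio of only about 7.4 is enough to produce a count in the mid-50s.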

Immersive video also targets 90fps, above the 70–80fps range often cited as the threshold of human motion perception, so movement feels lifelike.

A Perfect Match: URSA Cine Immersive + Vision Pro

Apple Immersive Video requires far more data than standard formats. Compared with traditional 4K DCI, it involves 5x the pixels, 3x the frame rate, and a separate image for each eye. Multiplied together (5 × 3 × 2), that comes to over 30 times the data rate.

Earlier stereo workflows relied on dual-camera rigs, which meant complex file syncing and projection work, with frequent quality loss. Blackmagic simplifies this by pairing the URSA Cine Immersive with DaVinci Resolve. Together, they cut out steps and preserve image fidelity.

How Immersive Capture Works

The URSA Cine Immersive captures a separate fisheye image for each eye, a format referred to as “Lens Space.” Unlike typical 180° and 360° workflows, Apple’s pipeline keeps this format all the way through to final playback.

Why? Lens Space minimizes optical compression at the center of the image, where viewers focus most. Converting to a format such as equirectangular uses pixels less efficiently and adds distortion.

Keeping footage in Lens Space avoids an extra conversion and re-encode, and lets the Vision Pro handle reprojection natively at playback. The result is maximum clarity where it matters most.
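To make “reprojection” more concrete, here is a simplified sketch of what mapping one Lens Space pixel into an equirectangular frame involves. It assumes an ideal equidistant fisheye (r = f·θ) with made-up center and focal-length values; the real URSA Cine Immersive lens and Apple’s player use their own calibrated models, which this does not reproduce.

```python
import math

# Idealized reprojection from a fisheye ("Lens Space") frame to a
# 180 x 180 degree equirectangular frame. Assumption: an ideal equidistant
# fisheye, where distance from the image center is proportional to the
# angle off the optical axis (r = f * theta). All numbers are made up.

def fisheye_to_ray(u, v, cx, cy, focal_px):
    """Map fisheye pixel (u, v) to a unit 3D ray (x right, y down, z forward)."""
    dx, dy = u - cx, v - cy
    theta = math.hypot(dx, dy) / focal_px  # angle from the optical axis
    phi = math.atan2(dy, dx)               # direction around the axis
    return (math.sin(theta) * math.cos(phi),
            math.sin(theta) * math.sin(phi),
            math.cos(theta))

def ray_to_equirect(x, y, z, width, height):
    """Map a unit ray to pixel coordinates in a 180 x 180 degree equirectangular frame."""
    lon = math.atan2(x, z)                   # -90..90 degrees across the frame
    lat = math.asin(max(-1.0, min(1.0, y)))  # -90..90 degrees down the frame
    return ((lon / math.pi + 0.5) * width,
            (lat / math.pi + 0.5) * height)

# This fisheye pixel lands at a fractional equirectangular position, so
# converting a whole frame requires interpolating (resampling) pixels.
ray = fisheye_to_ray(5000.0, 3000.0, cx=4080.0, cy=3600.0, focal_px=2600.0)
print(ray_to_equirect(*ray, width=8192, height=8192))
```

Because mapped positions fall between pixels, any intermediate conversion means resampling the entire frame, which is one reason skipping that step helps preserve detail.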

Metadata Drives Seamless Playback

To ensure perfect stereo alignment, each camera embeds its factory calibration as metadata in the Blackmagic RAW file. DaVinci Resolve reads this metadata and carries it through to the exported immersive file.

Because every clip carries its own calibration metadata, the Vision Pro can unwrap and reproject each one automatically and precisely during playback.

This automation eliminates the tedious syncing and manual correction of older 3D workflows.
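The per-clip calibration idea can be sketched as a small data structure plus a lookup. The field names (`cx`, `cy`, `focal_px`), the clip ID, the calibration values, and the equidistant lens model are all hypothetical illustrations; they are not the actual Blackmagic RAW metadata schema or Apple’s playback format.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class EyeCalibration:
    """Hypothetical per-eye lens parameters (not the real Blackmagic RAW schema)."""
    cx: float        # principal point x, in pixels
    cy: float        # principal point y, in pixels
    focal_px: float  # focal length in pixels for an equidistant fisheye

@dataclass(frozen=True)
class ClipCalibration:
    """Hypothetical calibration record carried alongside one clip."""
    clip_id: str
    left: EyeCalibration
    right: EyeCalibration

def pixel_to_angles(cal: EyeCalibration, u: float, v: float) -> tuple[float, float]:
    """Return (off-axis angle, azimuth) in degrees for pixel (u, v),
    assuming an ideal equidistant fisheye model r = f * theta."""
    dx, dy = u - cal.cx, v - cal.cy
    theta = math.hypot(dx, dy) / cal.focal_px
    phi = math.atan2(dy, dx)
    return math.degrees(theta), math.degrees(phi)

def unwrap_pixel(clip: ClipCalibration, eye: str, u: float, v: float) -> tuple[float, float]:
    """Use this clip's calibration for the requested eye to unwrap one pixel."""
    if eye == "left":
        cal = clip.left
    elif eye == "right":
        cal = clip.right
    else:
        raise ValueError(f"unknown eye: {eye!r}")
    return pixel_to_angles(cal, u, v)

# Made-up values for illustration only.
clip = ClipCalibration(
    clip_id="A001_C001",
    left=EyeCalibration(cx=4080.0, cy=3600.0, focal_px=2600.0),
    right=EyeCalibration(cx=4084.0, cy=3597.0, focal_px=2603.0),
)

# The same sensor coordinate maps to slightly different rays in each eye.
print(unwrap_pixel(clip, "left", 4080.0, 3600.0))
print(unwrap_pixel(clip, "right", 4080.0, 3600.0))
```

The design point is that calibration travels with the clip: a player that reads it per clip can correct small per-unit differences automatically, rather than relying on a single global setting or manual alignment.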

Filmmakers’ Perspective on the Workflow

The immersive result is hard to describe; you need to experience it. Apple offers in-store Vision Pro demos for anyone curious.

This isn’t just a technological shift; it’s a creative revolution. Like the invention of the cut or synchronized sound earlier in cinema history, immersive formats demand new storytelling techniques.

Traditional 2D approaches won’t fully translate. A new cinematic grammar is emerging, and you may help shape it.